CarND-Advanced-Lane-Lines

View the Project on GitHub MarkBroerkens/CarND-Advanced-Lane-Lines

The Project

The goals / steps of this project are the following:

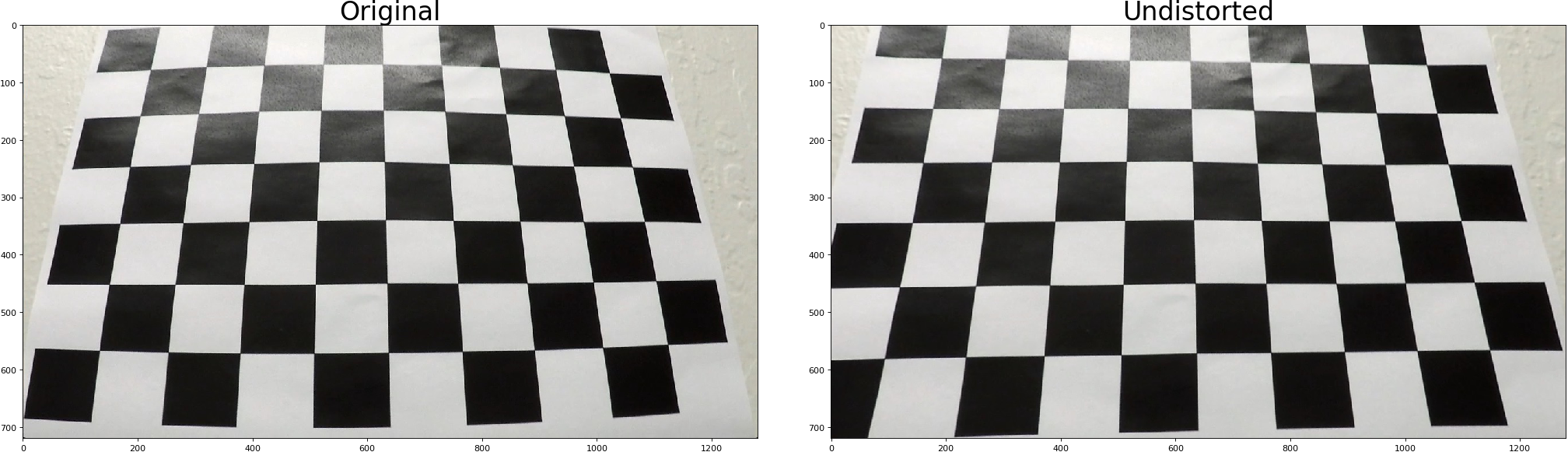

- Compute the camera calibration matrix and distortion coefficients given a set of chessboard images.

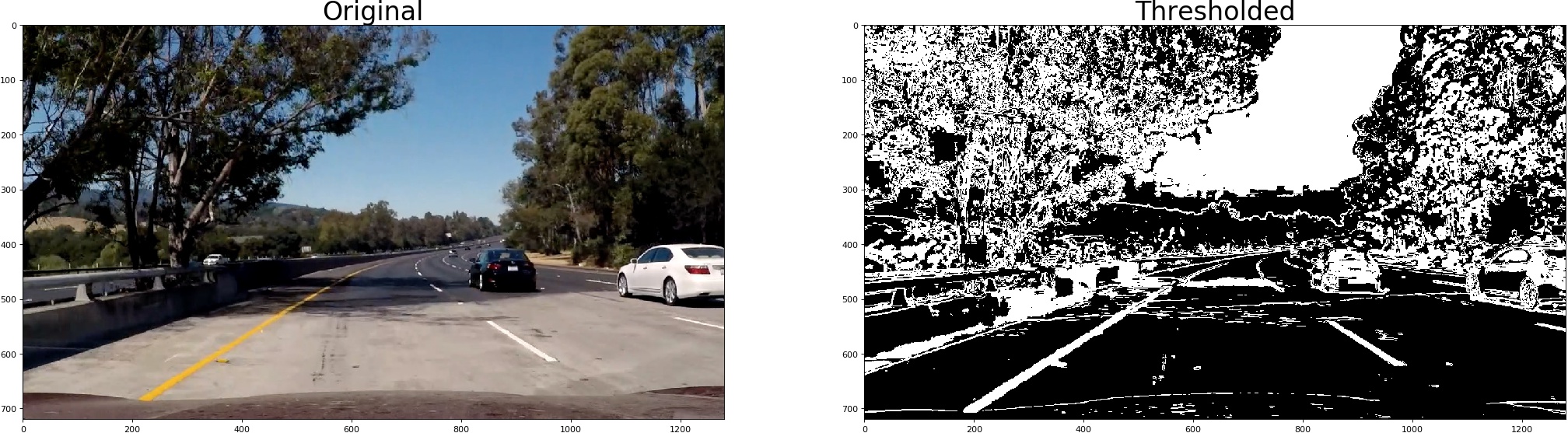

- Apply a distortion correction to raw images.

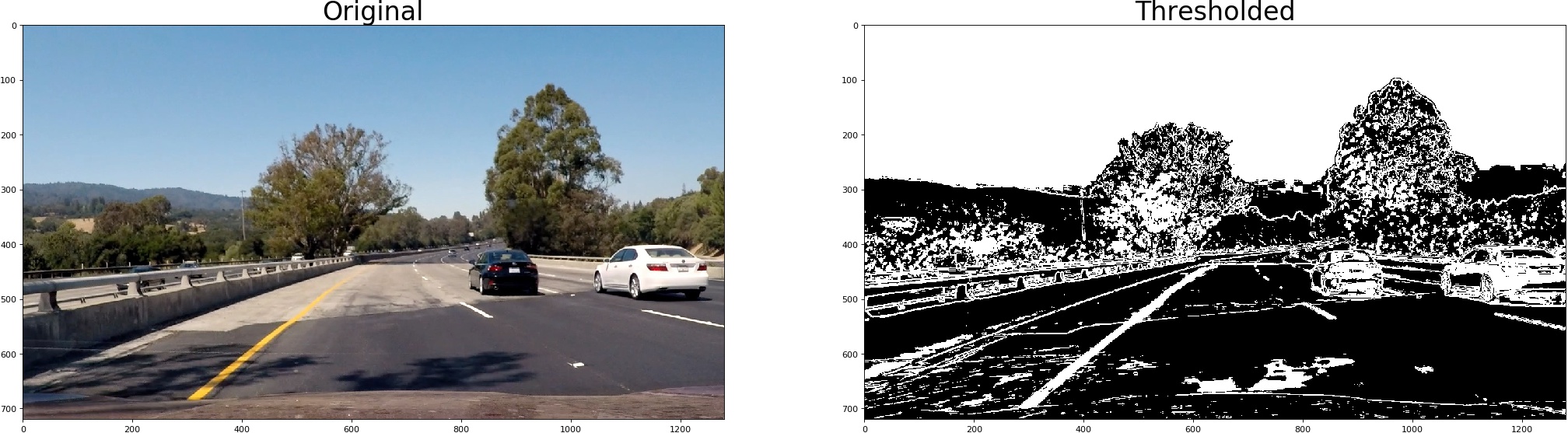

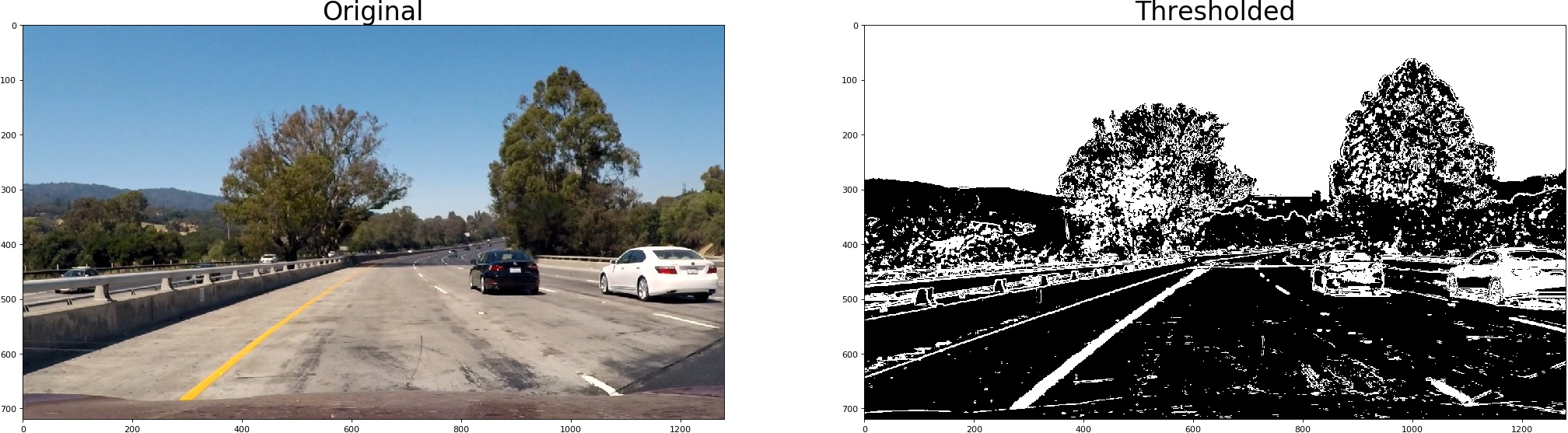

- Use color transforms, gradients, etc., to create a thresholded binary image.

- Apply a perspective transform to rectify the binary image (“bird's-eye view”).

- Detect lane pixels and fit to find the lane boundary.

- Determine the curvature of the lane and vehicle position with respect to center.

- Warp the detected lane boundaries back onto the original image.

- Output visual display of the lane boundaries and numerical estimation of lane curvature and vehicle position.

Overview of Files

My project includes the following files:

- README.md (writeup report): documentation of the results

- pipeline.py: the pipeline for lane line detection

- camera_calibration.py: code for calibration of the camera

- undistorter.py: code for correction of distortion

- threshold.py: code for calculation of thresholds

- perspective_trafo.py: code for the perspective transformation

- lanefinder.py: code for finding and drawing lane lines

- curvature.py: code for calculation of the curvature and of the position of the car within the lane lines

- image_util.py: code for loading and saving images and for visualizing original and processed images

- project video result

- challenge video result

- harder challenge video result

Camera Calibration

The code for this step is contained in the function calibrateCameraFromDir() in the file camera_calibration.py.

I start by preparing “object points”, which will be the (x, y, z) coordinates of the chessboard corners in the world. Here I am assuming the chessboard is fixed on the (x, y) plane at z=0, such that the object points are the same for each calibration image. Thus, objp is just a replicated array of coordinates, and objpoints will be appended with a copy of it every time I successfully detect all chessboard corners in a test image. imgpoints will be appended with the (x, y) pixel position of each of the corners in the image plane with each successful chessboard detection.
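The object point bookkeeping described above can be sketched as follows. This is a minimal illustration, not the code from camera_calibration.py: the helper names make_object_points() and collect_points() and the 9x6 inner-corner count are assumptions. The two resulting lists are what cv2.calibrateCamera(objpoints, imgpoints, image_size, None, None) expects, with image_size given as (width, height).

```python
import numpy as np


def make_object_points(nx, ny):
    """Return the (nx*ny, 3) grid of chessboard corners on the z=0 plane.

    Coordinates are in units of chessboard squares: (0,0,0), (1,0,0), ...
    """
    objp = np.zeros((nx * ny, 3), np.float32)
    # x varies fastest, matching the row-by-row order of detected corners
    objp[:, :2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)
    return objp


def collect_points(gray_images, nx=9, ny=6):
    """Collect objpoints/imgpoints for every image where all corners are found.

    nx=9, ny=6 is an assumed inner-corner count; adjust it to the actual board.
    """
    import cv2  # imported lazily so make_object_points() works without OpenCV

    objp = make_object_points(nx, ny)
    objpoints, imgpoints = [], []
    for gray in gray_images:
        found, corners = cv2.findChessboardCorners(gray, (nx, ny), None)
        if found:
            objpoints.append(objp.copy())  # same 3D points for every board
            imgpoints.append(corners)      # detected 2D pixel positions
    return objpoints, imgpoints
```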

I then used the output objpoints and imgpoints to compute the camera calibration and distortion coefficients using the cv2.calibrateCamera() function. I applied this distortion correction to the test image using the cv2.undistort() function and obtained this result:

Pipeline (single images)

The pipeline for single images is implemented in pipeline.py.

Example of a distortion-corrected image.

The following images demonstrate the distortion correction applied to a road image:

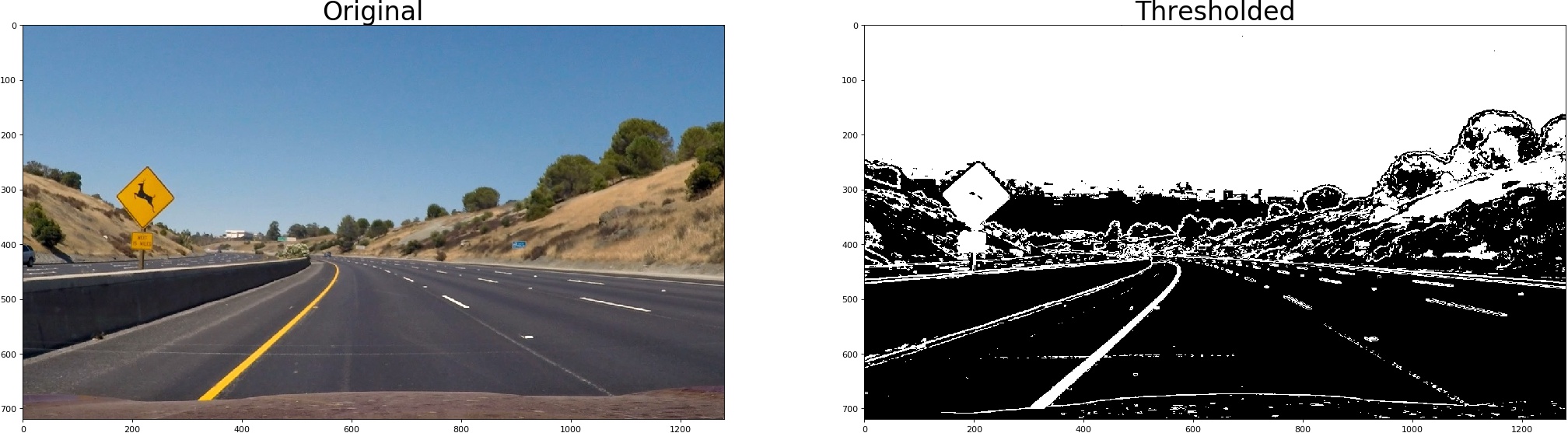

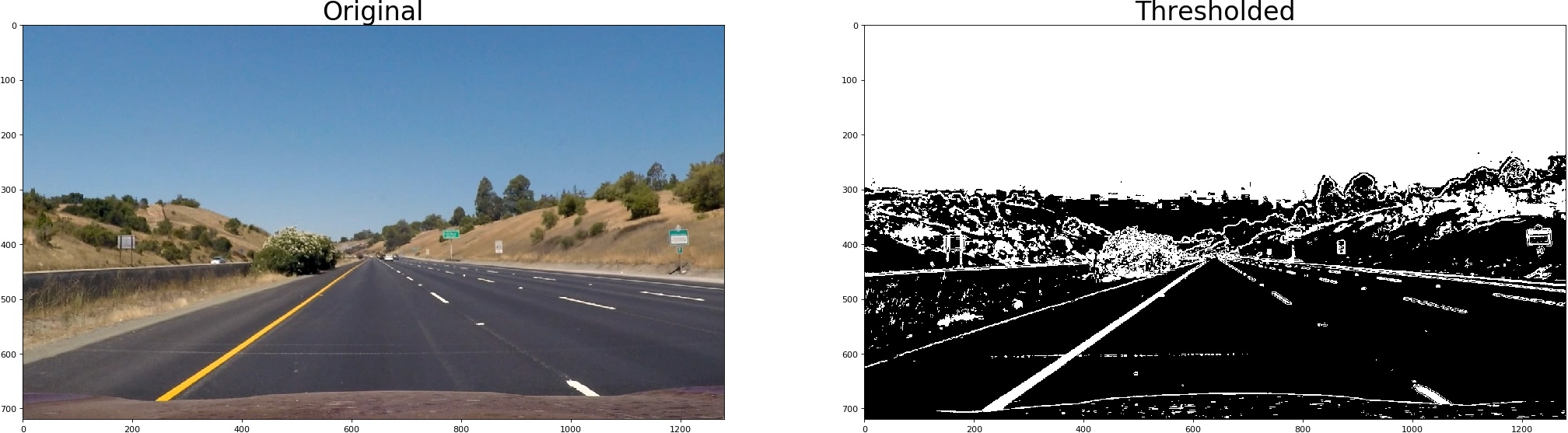
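To make the role of the distortion coefficients concrete, the sketch below applies the radial and tangential distortion model documented for OpenCV to points in normalized camera coordinates (pixel coordinates shifted by the principal point and divided by the focal length from the camera matrix). The function name distort_normalized() is hypothetical. cv2.undistort() works in the opposite direction: for each pixel of the corrected image it uses this forward model to find the corresponding location in the raw image and samples the raw image there.

```python
import numpy as np


def distort_normalized(points, dist):
    """Apply OpenCV's radial + tangential model to normalized image points.

    points: (N, 2) array of (x, y) in normalized camera coordinates
    dist:   (k1, k2, p1, p2, k3), the order cv2.calibrateCamera() returns
    """
    k1, k2, p1, p2, k3 = dist
    x, y = points[:, 0], points[:, 1]
    r2 = x * x + y * y
    # Radial term grows with the distance from the optical center
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    # Tangential terms model a lens that is not exactly parallel to the sensor
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)
```

With all coefficients zero the points are unchanged; a positive k1 pushes points outward in proportion to their squared distance from the center, which is the barrel/pincushion effect visible at the image borders.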

Used color transforms, gradients or other methods to create a thresholded binary image.

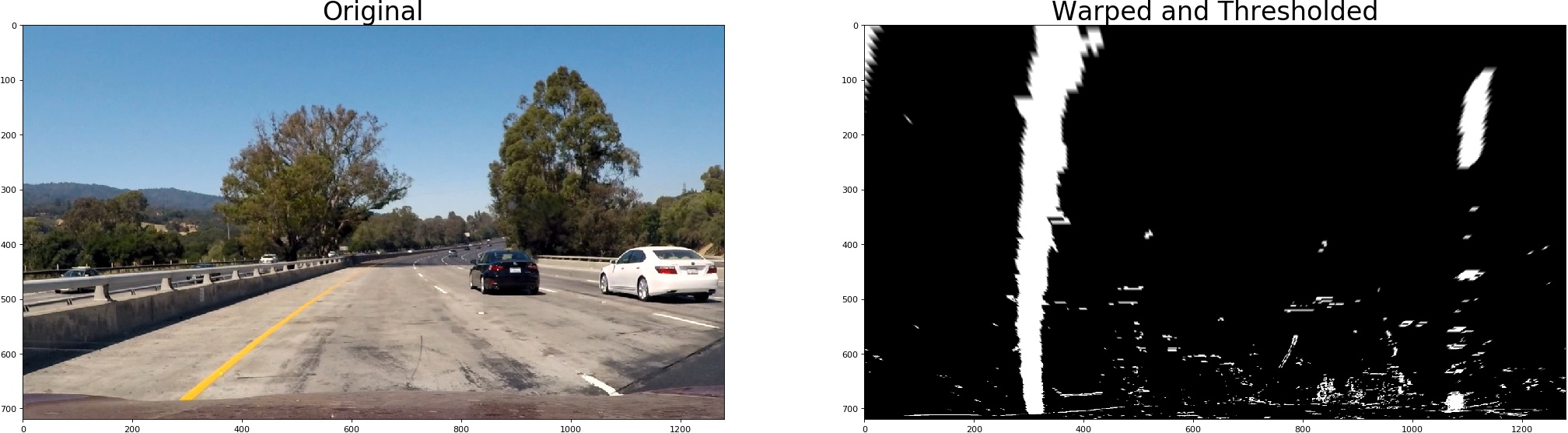

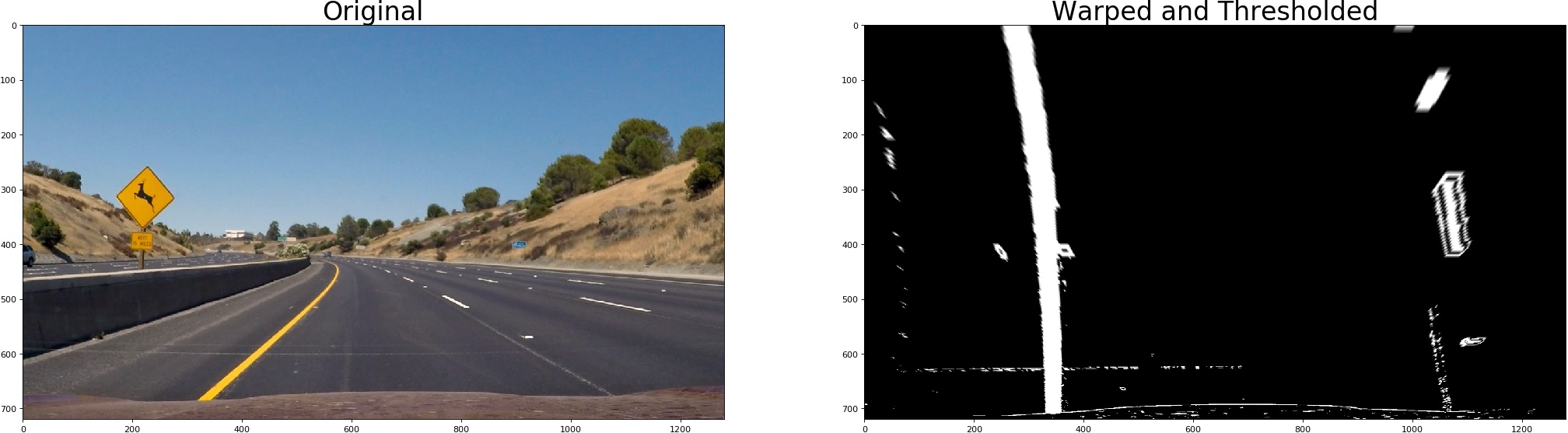

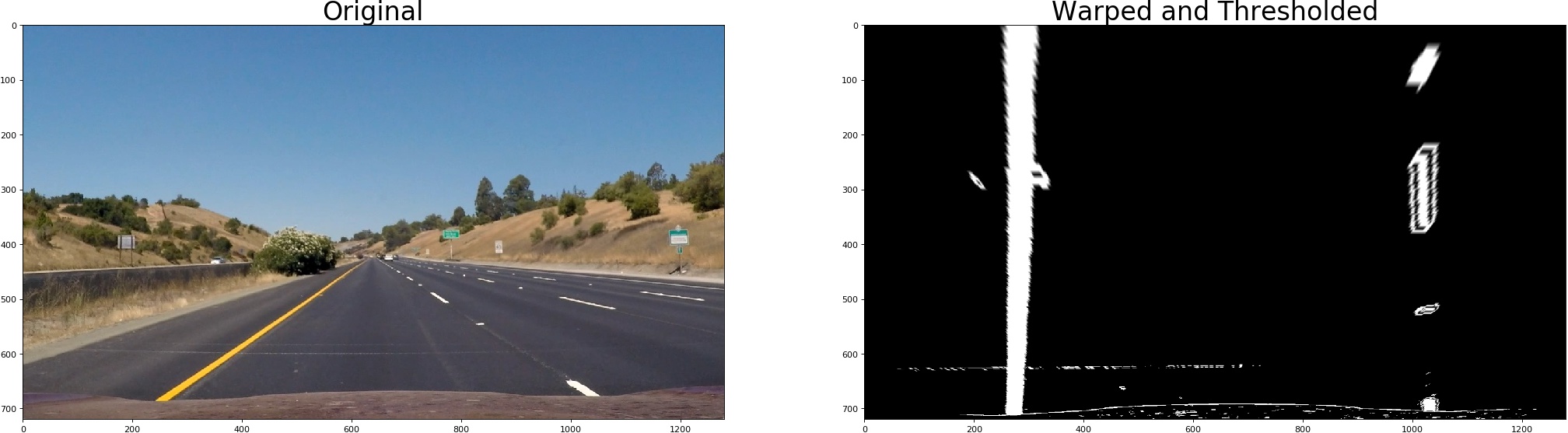

I used a combination of color and gradient thresholds to generate a binary image (threshold.py). Here’s an example of my output for this step.

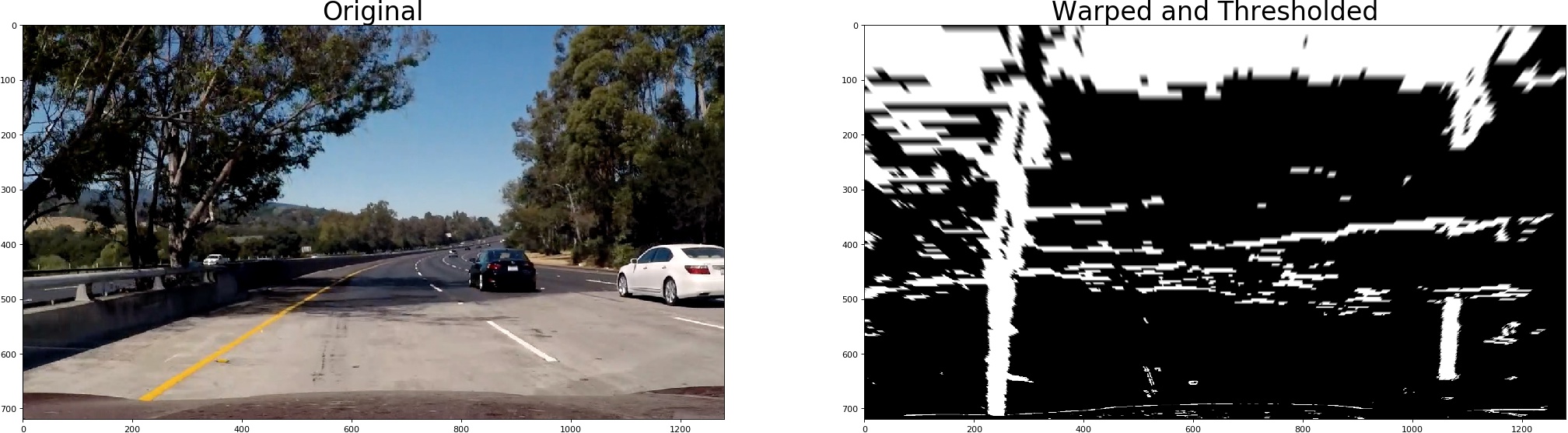

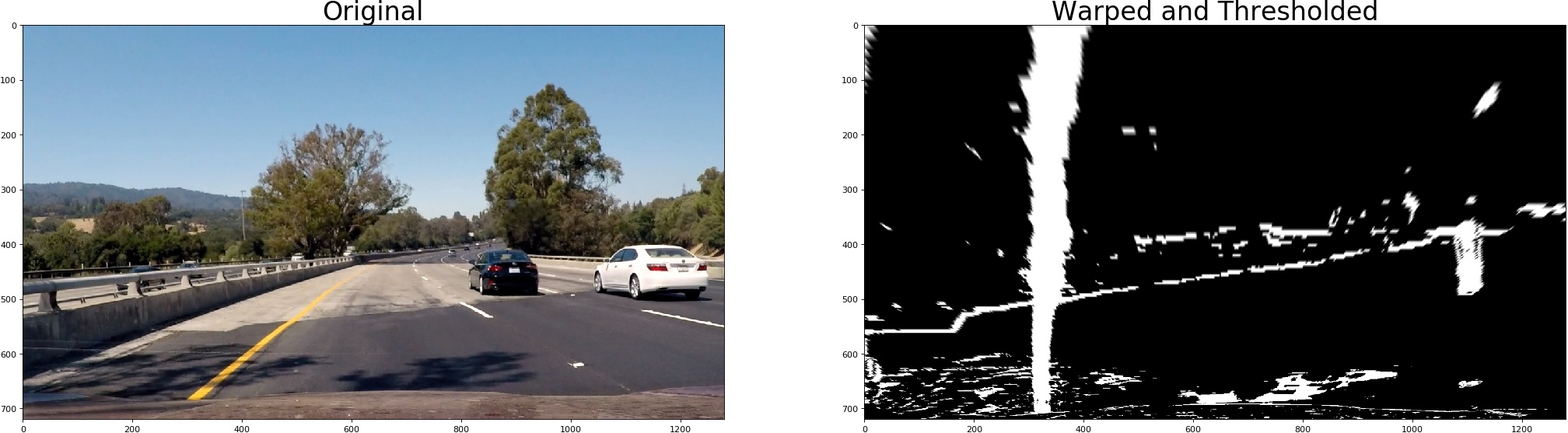
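A minimal sketch of such a color plus gradient combination, assuming the common choice of the HLS saturation channel for color and a horizontal gradient of the grayscale image. The function names, the threshold ranges and the use of np.gradient in place of cv2.Sobel are assumptions made to keep the example dependency-free; with OpenCV, the S channel would be cv2.cvtColor(img, cv2.COLOR_RGB2HLS)[:, :, 2].

```python
import numpy as np


def scale_to_uint8(values):
    """Rescale a non-negative array to 0..255 (zeros for an all-zero array)."""
    peak = np.max(values)
    if peak == 0:
        return np.zeros(values.shape, dtype=np.uint8)
    return np.uint8(255 * values / peak)


def combined_threshold(gray, s_channel, grad_thresh=(20, 100), s_thresh=(170, 255)):
    """Binary mask: moderate horizontal gradient OR high HLS saturation.

    gray, s_channel: 2D uint8 arrays of the same shape.
    np.gradient stands in for cv2.Sobel; threshold ranges are assumed values.
    """
    # Lane lines are mostly vertical in the image, so the x-gradient responds best
    grad_x = np.abs(np.gradient(gray.astype(np.float64), axis=1))
    scaled = scale_to_uint8(grad_x)
    grad_mask = (scaled >= grad_thresh[0]) & (scaled <= grad_thresh[1])
    # Saturation stays high for yellow and white paint, even in changing light
    color_mask = (s_channel >= s_thresh[0]) & (s_channel <= s_thresh[1])
    return (grad_mask | color_mask).astype(np.uint8)
```

Because the gradient is rescaled to 0..255 before thresholding, an upper bound of 100 also discards the very strongest edges of each image; that bound is one of the parameters worth tuning.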

Perspective transform

The code for my perspective transform includes a function called warp(), which appears in the file perspective_trafo.py. The warp() function takes an image (img) as input and runs cv2.warpPerspective() using the following source (src) and destination (dst) points. I chose to hardcode the source and destination points in the following manner:

```python
import numpy as np

# img_size is (width, height), e.g. img.shape[1::-1] for an image array
src = np.float32(
    [[(img_size[0] / 2) - 62, img_size[1] / 2 + 100],  # top left
     [((img_size[0] / 6) - 10), img_size[1]],          # bottom left
     [(img_size[0] * 5 / 6) + 60, img_size[1]],        # bottom right
     [(img_size[0] / 2 + 62), img_size[1] / 2 + 100]])  # top right

dst = np.float32(
    [[(img_size[0] / 4), 0],
     [(img_size[0] / 4), img_size[1]],
     [(img_size[0] * 3 / 4), img_size[1]],
     [(img_size[0] * 3 / 4), 0]])
```

This resulted in the following source and destination points:

| Source | Destination |
|---|---|
| 578, 460 | 320, 0 |
| 203, 720 | 320, 720 |
| 1127, 720 | 960, 720 |
| 702, 460 | 960, 0 |

I verified that my perspective transform was working as expected by drawing the src and dst points onto a test image and its warped counterpart and checking that the lines appear parallel in the warped image.

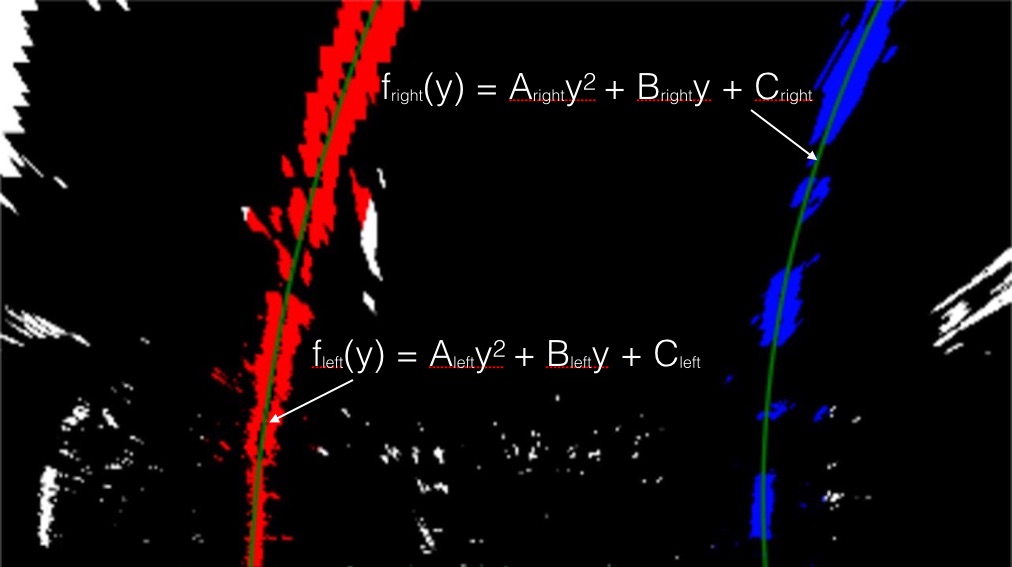
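In OpenCV, the matrix passed to cv2.warpPerspective() is typically obtained with cv2.getPerspectiveTransform(src, dst), and swapping the two arguments gives the inverse matrix needed to warp the detected lane back onto the road. The sketch below solves the same 8-unknown linear system in NumPy, so the point table above can be checked numerically; perspective_matrix() and apply_homography() are hypothetical names.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve for the 3x3 homography that maps 4 src points onto 4 dst points.

    Each correspondence gives two linear equations in the 8 unknowns
    (the bottom-right matrix entry is fixed to 1).
    """
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h = np.linalg.solve(np.array(A, dtype=np.float64), np.array(b, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(M, points):
    """Map (N, 2) points through M using homogeneous coordinates."""
    pts = np.hstack([points, np.ones((len(points), 1))])
    mapped = pts @ M.T
    return mapped[:, :2] / mapped[:, 2:3]  # divide by w to get back to 2D
```

For a 1280x720 frame, perspective_matrix(src, dst) maps the four src points of the table exactly onto the four dst points, and perspective_matrix(dst, src) gives the inverse transformation.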

Identifying lane-line pixels and fitting their positions with a polynomial

The detection of lane lines starts with searching for peaks in the histogram of the bottom part of the thresholded and warped image. The identified peaks are used as the starting points for following each line with the sliding window approach (lanefinder.py, function findLanes1st()).
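The histogram and sliding window search can be sketched as below. This is a minimal NumPy version under assumptions, not findLanes1st() itself: the function name, the number of windows, the window margin and the re-centering threshold minpix are illustrative defaults.

```python
import numpy as np


def sliding_window_fit(binary, nwindows=9, margin=100, minpix=50):
    """Find both lane lines in a warped binary image and fit x = f(y).

    Returns (left_fit, right_fit), each the coefficients [A, B, C] of
    x = A*y**2 + B*y + C. Assumes both lines contain some pixels.
    """
    height, width = binary.shape
    # Column sums of the bottom half: the peaks mark where the lines start
    histogram = np.sum(binary[height // 2:, :], axis=0)
    midpoint = width // 2
    current = [int(np.argmax(histogram[:midpoint])),
               int(np.argmax(histogram[midpoint:])) + midpoint]

    nonzero_y, nonzero_x = binary.nonzero()
    window_height = height // nwindows
    lane_inds = [[], []]
    # Step the windows from the bottom of the image upwards
    for window in range(nwindows):
        y_low = height - (window + 1) * window_height
        y_high = height - window * window_height
        for side in (0, 1):
            x_low, x_high = current[side] - margin, current[side] + margin
            good = ((nonzero_y >= y_low) & (nonzero_y < y_high) &
                    (nonzero_x >= x_low) & (nonzero_x < x_high)).nonzero()[0]
            lane_inds[side].append(good)
            # Re-center the next window if enough pixels were found
            if len(good) > minpix:
                current[side] = int(np.mean(nonzero_x[good]))

    fits = []
    for side in (0, 1):
        inds = np.concatenate(lane_inds[side])
        # Fit x as a function of y, since lane lines are close to vertical
        fits.append(np.polyfit(nonzero_y[inds], nonzero_x[inds], 2))
    return fits[0], fits[1]
```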

After the initial line is detected, we can search for its new location in the next frame, starting in the area where the current line was detected (lanefinder.py, function findLanesNext()).

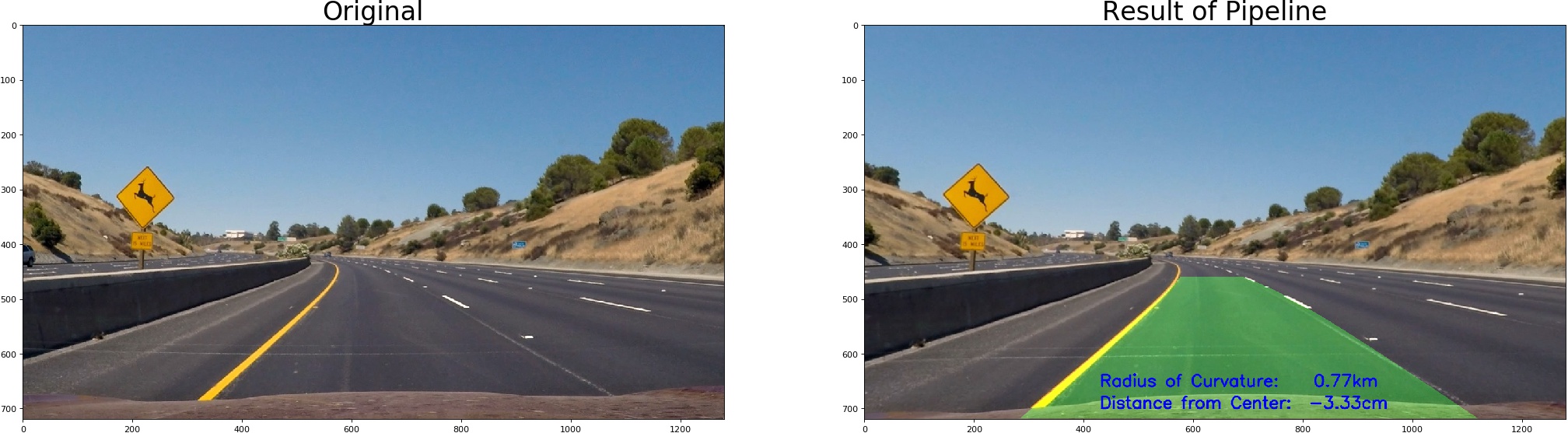
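Searching around the previous fit can be sketched like this; search_around_fit() and its margin are hypothetical and only illustrate the idea behind findLanesNext(). Only pixels within a horizontal band around the previous polynomial are used for the new fit, which is cheaper than a full sliding window search and less likely to pick up unrelated edges elsewhere in the image.

```python
import numpy as np


def search_around_fit(binary, prev_fit, margin=100):
    """Refit one lane line using only pixels near the previous polynomial.

    prev_fit: [A, B, C] of x = A*y**2 + B*y + C from an earlier frame.
    margin (pixels) is an assumed default. Returns None if too few pixels.
    """
    nonzero_y, nonzero_x = binary.nonzero()
    # Where the previous line would be in each row that has a lit pixel
    predicted_x = np.polyval(prev_fit, nonzero_y)
    inside = np.abs(nonzero_x - predicted_x) < margin
    if np.count_nonzero(inside) < 3:
        return None  # the caller can fall back to the sliding window search
    return np.polyfit(nonzero_y[inside], nonzero_x[inside], 2)
```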

Calculated the radius of curvature of the lane and the position of the vehicle with respect to center.

I did this in the functions curvature() and lanepos() in curvature.py.
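For a fit x = Ay² + By + C, the radius of curvature at row y is R = (1 + (2Ay + B)²)^(3/2) / |2A|. The sketch below evaluates it in meters and computes the lateral offset from the lane center. The pixel-to-meter scales (30 m per 720 rows, 3.7 m per 700 columns), the assumption that the camera sits at the center of the car, and the function names are illustrative, not taken from curvature.py.

```python
import numpy as np

# Assumed pixel-to-meter scales for a 1280x720 warped image (hypothetical
# values; the real ones depend on the chosen src/dst points)
YM_PER_PIX = 30 / 720   # meters per pixel along the lane
XM_PER_PIX = 3.7 / 700  # meters per pixel across the lane


def radius_of_curvature(fit_px, y_eval_px, ym=YM_PER_PIX, xm=XM_PER_PIX):
    """Radius in meters of x = A*y**2 + B*y + C at row y_eval_px.

    The pixel fit is rescaled to meters analytically instead of refitting.
    """
    a, b, _ = fit_px
    a_m = a * xm / ym**2
    b_m = b * xm / ym
    y_m = y_eval_px * ym
    if a_m == 0:
        return np.inf  # a straight line has an infinite radius
    return (1 + (2 * a_m * y_m + b_m) ** 2) ** 1.5 / abs(2 * a_m)


def vehicle_offset(left_fit, right_fit, width_px, height_px, xm=XM_PER_PIX):
    """Signed distance in meters of the image center from the lane center.

    Assumes the camera is mounted at the car's center; positive = right.
    """
    y = height_px - 1  # bottom row, closest to the car
    lane_center = (np.polyval(left_fit, y) + np.polyval(right_fit, y)) / 2
    return (width_px / 2 - lane_center) * xm
```

Evaluating at the bottom row (y = 719 for a 720-pixel-high image) reports the curvature closest to the vehicle.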

Example image of result plotted back down onto the road such that the lane area is identified clearly.

I implemented this step in lines 40 to 48 of pipeline.py and in the function draw() in lanefinder.py. Here is an example of my result on a test image:

Pipeline (video)

The creation of the final video is implemented in videoprocess.py.

Final video output

- Here’s a link to my project video result

- Here’s a link to my challenge video result

- Here’s a link to my harder challenge video result

Discussion

During the implementation of this project, I found it very useful to write Python unit tests, both to test the code and to calculate and evaluate different parameters, e.g. in the context of thresholding. The chosen approach worked pretty well for all three test videos. However, it had some problems when the surface of the street changes, when there is a lot of shadow on the road, when lane lines are missing, etc.

Improvements could be:

- Consider previous polynomials that were fitted to the lane lines in order to smooth the lane line detection.

- Add sanity checks that discard values that do not make sense. In that case, the previous polynomials could be reused.

- The values of the curvature and the position of the car are hard to read since they change so often. Less frequent updates of the displayed values and averaging over several frames of the video could help.

- To reduce the effort for calculating the thresholds, etc., the images could be reduced to the area of interest (the street only).

- There might be better values for the min and max thresholds of the threshold calculation. More fine-tuning might produce even better results.
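The smoothing, sanity check and averaging ideas above could be combined in a small helper along these lines. LineHistory is hypothetical, and its sanity check (rejecting a fit whose x-position at the bottom row jumps by more than max_shift_px from the running average) is only one possible criterion; the frame count and pixel limit are assumed values.

```python
from collections import deque

import numpy as np


class LineHistory:
    """Hypothetical helper: average recent fits and reject implausible ones."""

    def __init__(self, n_frames=5, max_shift_px=50.0, y_eval=719):
        self.fits = deque(maxlen=n_frames)  # the oldest fit drops out automatically
        self.max_shift_px = max_shift_px
        self.y_eval = y_eval  # row at which fits are compared (image bottom)

    def average(self):
        """Mean coefficients of the stored fits, or None if there are none."""
        return np.mean(self.fits, axis=0) if self.fits else None

    def _plausible(self, fit, current):
        """Sanity check: the line must not jump sideways at the image bottom."""
        shift = np.polyval(fit, self.y_eval) - np.polyval(current, self.y_eval)
        return abs(shift) <= self.max_shift_px

    def update(self, fit):
        """Store fit if it passes the check; return the smoothed fit."""
        current = self.average()
        if fit is not None and (current is None or self._plausible(fit, current)):
            self.fits.append(np.asarray(fit, dtype=float))
        # If the new fit was rejected or missing, the previous average is reused
        return self.average()
```

Curvature and vehicle position computed from the averaged fit change more slowly from frame to frame, which also addresses the readability of the displayed values.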

Further Reading

The following links provide more information on camera calibration: